Proving You’re Human: How to Solve Privacy in the Era of AI

This article proposes a privacy solution for when proof of personhood starts to proliferate across the internet, using a mechanism called ‘Sessions.’

The internet has no reliable way to verify a unique human identity.

As AI makes human impersonation increasingly convincing, this flaw has evolved from a minor oversight into an existential threat to digital trust.

Consider the AI voice clone that convinced a company to wire $25 million to fraudsters, or synthetic media that portrays events that never occurred with participants who never existed. Platforms struggle against manipulation by entities controlling thousands of fake accounts.

We need ways to verify that one human gets one account: the "proof of personhood" challenge that many projects are racing to solve. But verification alone creates a new problem: if everyone online is verified, what happens to privacy?

Tying each unique human to a persistent digital identifier creates the perfect surveillance tool: one identifier to track you everywhere, link all your actions and control your digital existence.

Here are six key privacy issues that any proof of personhood solution must address:

1. Verification Privacy

How do we verify someone is human without compromising privacy during the verification process?

Biometrics, government IDs, and social validation all involve sensitive information that, if stored improperly, creates massive honeypots. KYC (know your customer) doesn't solve the problem either: it doesn't stop a person from opening as many accounts as they want, and traditional KYC systems are overly centralized and insecure.

2. Master Key Vulnerability

Verified systems provide users with “master keys” to control their digital identities, but this method creates a dangerous single point of failure.

A government can force you to surrender the key. A sophisticated attack can steal it. You can simply lose it.

Vitalik Buterin has noted that governments can force travelers to surrender private keys as a condition for border crossing or receiving a visa. I've experienced something close to this: when crossing a prominent border, I was once asked to provide full access to my phone. This future isn't so far away.

3. Cross-Platform Identity Linking

Without unified design, unique digital identities make it easy to track individuals across different platforms. This destroys contextual integrity.

Most of us want to present different aspects of ourselves in different contexts. In the physical world, your behavior at a party isn't visible to your employer. Likewise, we create multiple social media accounts or crypto wallets to maintain similar separation, but these approaches have their own tracking vulnerabilities.

4. Transaction Linkability

Even when using separate identifiers (like zk-based app-specific IDs) for different platforms, transactions can often be traced back to your main identity through on-chain analysis.

If you claim an airdrop with one zk-ID but transfer it to your main wallet, you've created a trackable connection.

Worldcoin's app-specific IDs partially address cross-platform linking, but don't solve this transaction linkability problem.
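To make the linkability problem concrete, here is a minimal sketch of how an observer might cluster addresses. It uses a deliberately naive heuristic (any direct transfer is treated as a sign of common ownership), and the address names and the `cluster_addresses` helper are hypothetical; real on-chain analysis relies on richer signals such as timing, amounts and shared funding sources.

```python
from collections import defaultdict


def cluster_addresses(transfers):
    """Group addresses connected by any chain of direct transfers (union-find)."""
    parent = {}

    def find(addr):
        parent.setdefault(addr, addr)
        while parent[addr] != addr:
            parent[addr] = parent[parent[addr]]  # path halving
            addr = parent[addr]
        return addr

    for sender, receiver in transfers:
        parent[find(sender)] = find(receiver)

    clusters = defaultdict(set)
    for addr in list(parent):
        clusters[find(addr)].add(addr)
    return list(clusters.values())


# Hypothetical addresses: the airdrop is claimed by a fresh wallet, then moved.
transfers = [
    ("airdrop_wallet", "main_wallet"),  # the single transfer that creates the link
    ("stranger_a", "stranger_b"),       # unrelated activity
]
clusters = cluster_addresses(transfers)
linked = next(c for c in clusters if "airdrop_wallet" in c)
print(linked)
```

One public transfer is enough to place the fresh wallet and the main wallet in the same cluster, which is why separate identifiers alone do not give transaction privacy.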

5. Platform-Specific Tracking

Even with separate zk-IDs for different platforms, each platform can still build comprehensive profiles by tracking all the actions within their ecosystem.

If Google knows every Gmail account, YouTube channel, and Search history belongs to the same verified human — even if they don't know which specific human — they can still build detailed profiles. This is ultimately a platform-level decision, and while we can't force platforms to change their business models, we can design systems that make it easier to maintain separation and privacy.

6. Pseudonymity Limitations

Platforms adopting proof of personhood might deprioritize unverified accounts, effectively killing pseudonymity.

As Vitalik has noted, “finsta” and “rinsta” accounts serve real purposes. Without separate but legitimate online personas, verified systems risk eliminating crucial safety valves for creativity, exploration and whistleblowing.

A Comprehensive Solution

Most proof of personhood projects address just one or two of these challenges, but a complete solution must address all six. This requires going beyond zk-based app-specific IDs. (In this piece, I won't dive into solving #1 — verification privacy — as this topic deserves its own full examination, but let’s assume it’s solved.)

We need a system that:

Verifies you're a unique human once, while preserving privacy

Allows unlinkable but verifiable sub-identities

Gives each sub-identity its own cryptographic credentials (including separate keys and wallets) that function independently while deriving from a single root identity

Prevents any entity from connecting these identities without explicit consent

Incentivizes users to keep their root identity private

Motivates platforms to use action-specific, zk-based IDs to preserve privacy inside the platform

Supports private transfers between sub-identities to restrict public on-chain analysis

This would enable you to prove your humanness without revealing your identity, maintain separate profiles that can't be linked, and recover from compromise without losing your core identity.

Current approaches, including Worldcoin, fall short. Their app-specific IDs don't include separate transaction infrastructure or private transfers, leaving activities linkable. They don't adequately address the master key vulnerability, and their verification depends on specialized hardware that is difficult to decentralize.

Vitalik’s “explicit pluralistic identity” is promising, but it assumes that one-human-one-account systems destroy pseudonymity, overlooking key design possibilities. The effort needed to earn a reputation across multiple identities is massive, which hampers adoption. The approach also relies on platforms choosing to implement identity pluralism, a significant ask when their business models depend on comprehensive user profiles. In practice, platforms would likely default to recognizing only a user's most-connected identity, effectively collapsing multiple identities into one.

Sessions

One approach I've been exploring for the last few years is what I call "sessions": sub-identities, cryptographically derived from a root identity, that:

Prove you're a unique human without revealing who you are

Cannot be publicly linked to your root identity or other sessions

Inherit cryptographic proofs and credentials of a root identity

Include independent transaction capabilities

Build context-specific reputation without context collapse

Enable the creation of their own sub-sessions for completely separate actions, further increasing privacy

Support private transfers

Can be revoked by the user

With sessions, if someone demands your master key, you can provide a session key instead, only granting access to that specific context, not your entire digital life.

If a session key is compromised, you can revoke it without losing your root identity, creating strong disincentives for identity theft. Sessions can support one-time-use session logic too.
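As a rough illustration of derivation and revocation, the sketch below derives per-context session keys from a root secret with HMAC-SHA256 and tracks revoked session IDs. This is an assumption-laden toy, not the construction described in this article: the function names, the `SessionRegistry` class and the context strings are hypothetical, and a real design would also need a zero-knowledge (or TEE-backed) proof that each session descends from a verified root identity.

```python
import hashlib
import hmac
import secrets


def derive_session_key(root_secret: bytes, context: str, index: int = 0) -> bytes:
    """Derive a per-context key; without root_secret the outputs look unrelated."""
    message = f"session|{context}|{index}".encode()
    return hmac.new(root_secret, message, hashlib.sha256).digest()


def session_id(session_key: bytes) -> str:
    """Public identifier for a session; reveals neither the key nor the root."""
    return hashlib.sha256(b"session-id|" + session_key).hexdigest()


class SessionRegistry:
    """Hypothetical revocation list keyed by public session ID."""

    def __init__(self):
        self._revoked = set()

    def revoke(self, sid: str) -> None:
        self._revoked.add(sid)

    def is_active(self, sid: str) -> bool:
        return sid not in self._revoked


root_secret = secrets.token_bytes(32)  # never leaves the user's device
forum_key = derive_session_key(root_secret, "forum.example")
wallet_key = derive_session_key(root_secret, "wallet.example")

registry = SessionRegistry()
registry.revoke(session_id(forum_key))  # a compromised session is cut off
print(registry.is_active(session_id(wallet_key)))
```

Handing over or losing `forum_key` exposes only the forum context: the root secret and the wallet session cannot be computed from it, and the revoked session can be replaced by deriving a new one with a different `index`.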

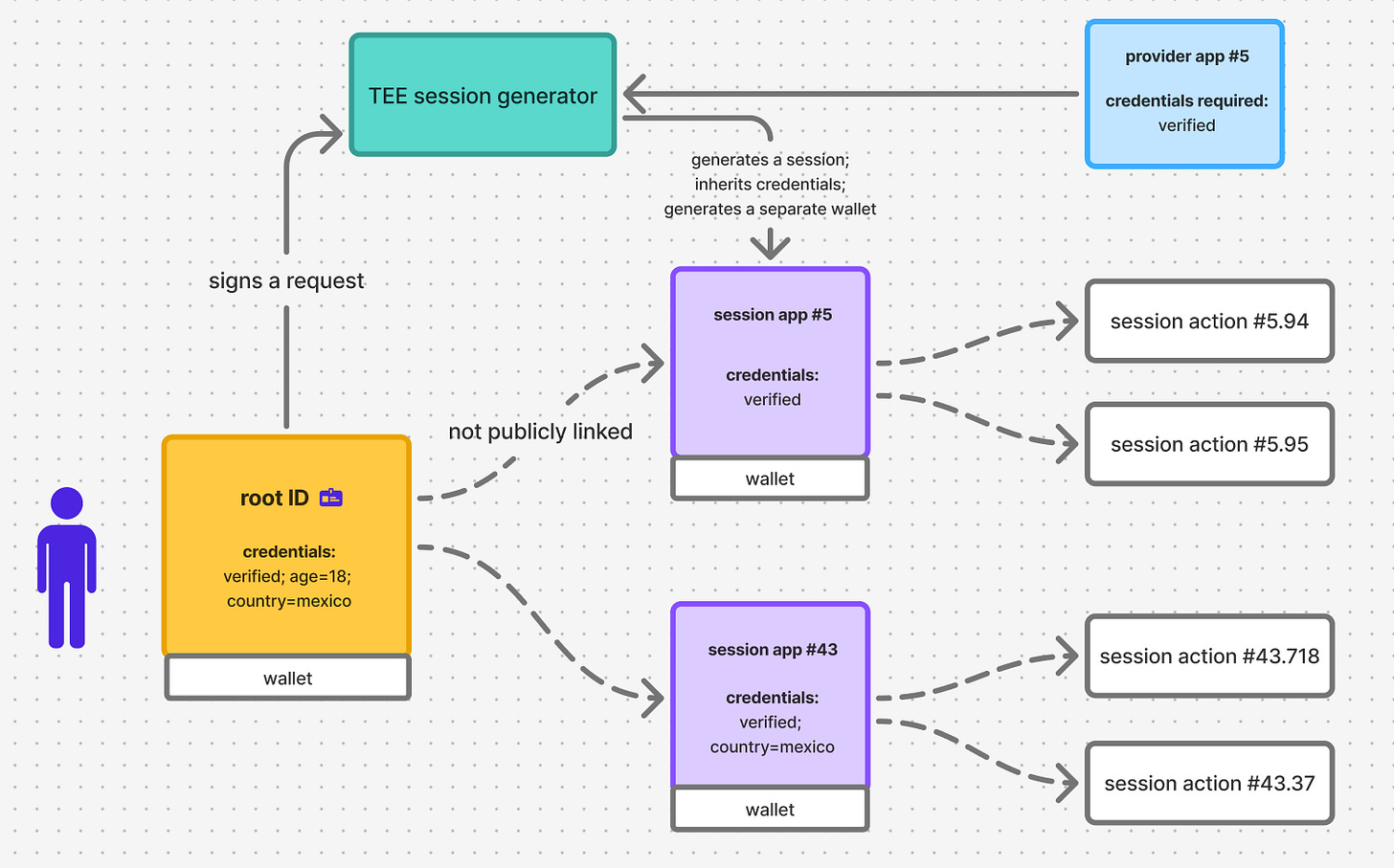

Another way to implement sessions is to use TEE infrastructure instead of zero-knowledge proofs. If an MPC-based TEE certified by the network can verify that a request to create a session is signed by the root ID owner, the TEE can place a validation proof on-chain while keeping the user’s request private.

A “provider” for an app can define a list of required and optional credentials for session generation, along with custom logic for those credentials. For example, a provider that allows more than one account per human can check how many sessions already exist for that provider, which automatically adds pseudonymity to the platform.
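A provider's rules could be expressed as a small policy object. The sketch below is illustrative only: `ProviderPolicy`, its fields and the credential names are hypothetical, and the check runs on plaintext values, whereas in a privacy-preserving deployment the same conditions would be proven in zero knowledge or evaluated inside the TEE.

```python
from dataclasses import dataclass, field


@dataclass
class ProviderPolicy:
    """Hypothetical session-issuance rules for one provider."""

    required: frozenset
    optional: frozenset = field(default_factory=frozenset)
    max_sessions: int = 1  # 1 means strictly one account per human

    def can_issue(self, credentials: set, existing_sessions: int) -> bool:
        """Allow a new session if all required credentials are present
        and the per-human session cap has not been reached."""
        missing = self.required - credentials
        return not missing and existing_sessions < self.max_sessions

    def accepted_optional(self, credentials: set) -> set:
        """Optional credentials the user chose to present."""
        return set(self.optional & credentials)


policy = ProviderPolicy(
    required=frozenset({"unique_human"}),
    optional=frozenset({"age_over_18"}),
    max_sessions=3,  # up to three pseudonymous personas per human
)

print(policy.can_issue({"unique_human"}, existing_sessions=2))
print(policy.can_issue({"unique_human"}, existing_sessions=3))
print(policy.can_issue({"age_over_18"}, existing_sessions=0))
```

Setting `max_sessions` above one is what lets a platform offer verified pseudonyms while still bounding how many personas a single human can hold.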

The root identity is kept private for almost all digital interactions, with most transfers and authentications handled through sessions. This is an important design choice for clients, as it prevents ‘leaking’ unexpected attributes linked to the root ID that could be exposed through on-chain analysis and ultimately de-anonymize the user.

Limitations and Challenges

Users may struggle with managing multiple derived identities, creating UX friction. There's also a trust issue with client implementations: incorrectly developed (or malicious) apps could accidentally reveal connections between sessions or expose root identities.

Sessions also cannot prevent platforms from enforcing a one-account-per-human policy (challenge #6) if they choose to — that remains a platform-level decision. However, by making it easier for platforms to support multiple verified personas per human, we can create an environment where privacy-respecting business models win.

Closing Thoughts

In a world where AI can clone your voice after hearing five seconds of audio, proving you're human — and uniquely yourself — is becoming essential. The path forward requires verification with comprehensive privacy — solving all six challenges together.

The technology is here, but questions remain:

How do we make privacy the default rather than an option?

How do we incentivize platforms to respect identity boundaries and data ownership?

How do we balance the legitimate regulatory needs with individual privacy?

What governance structures ensure these systems evolve to protect users rather than exploit them?

These are the questions I’m currently exploring... If you're working to solve any of these, my DMs are open.

Kirill Avery is intensively building privacy-preserving digital identity infrastructure; more news is coming soon.

We're both thinking along the same lines of privacy-preserving unique identity and should collab on this.

I created BrightID as a public good to address this problem, but it never grew beyond the Ethereum ecosystem. Maybe I was too early 7 years ago and now is the right time to go mainstream.

Here's how BrightID addresses the six key privacy issues you surfaced.

1. Verification privacy

Names and photos are shared peer-to-peer when you connect; they aren't stored on servers, so there's no honeypot. Their only use is to recall with whom you connected; you could use a nym and a picture of your cat. You can use a different name and photo with different connections.

I agree KYC is great for corrupt people and terrible for honest people, so it's a no-go.

Biometrics hand-wave over the real problem of trust and create a new batch of problems. I'd be happy to enumerate the problems for you, but I think you've already come to the conclusion that biometrics are not the answer.

2. Master Key Vulnerability

Your identifier is under the stewardship of a number of people you trust to be your "recovery" connections. They can create and revoke signing keys for you to let you recover from an attack or a loss.

We even created a Soulbound NFT that was claimed by 10,000 people. It had zero resale value because an owner could use social recovery to recover it into any wallet they chose. So if their wallet was stolen or they lost everything, they could still get their Soulbound NFT back. This is what Soulbound really means, not just a "non-transferable token."

3. Cross-platform identity linking

Apps never see your main identifier. They only see a contextID or appID.

4. Transaction Linkability

BrightID isn't a wallet, but it allows you to generate new wallets and seed them with gas through a BrightID-enabled faucet, creating new unlinked addresses.

5. Platform-Specific Tracking

Apps within a platform can each register with BrightID separately and have their own system of identifiers (appIDs).

6. Pseudonymity Limitations

Let's think about this more. An app could allow you to be verified multiple times, but only once with a level 2 verification; the rest of your pseudonyms could have at most a level 1 verification, and they could be limited in number. This permits exploration in certain areas of an app but limits some areas more strictly. Because we use blind signatures, neither the app nor BrightID nodes can link your pseudonyms together. The people who verify you (who already know you) would know the number of pseudonyms you have (to impose a maximum per app), but not know the actual pseudonyms.
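For readers unfamiliar with blind signatures, the sketch below shows the general idea using textbook RSA blinding. It is not BrightID's actual scheme (the reply does not say which one is used), the key is a toy value that is far too small to be secure, and the helper names are hypothetical. The point is only that the signer signs a blinded value and never sees the pseudonym it is vouching for.

```python
import hashlib
import math
import secrets

# Toy RSA key (p=61, q=53). Illustration only; real keys are 2048+ bits.
N, E, D = 3233, 17, 2753


def message_to_int(pseudonym: str) -> int:
    """Hash the pseudonym into the RSA group (toy-sized, so collisions are easy)."""
    digest = hashlib.sha256(pseudonym.encode()).digest()
    return int.from_bytes(digest, "big") % N


def random_blinding_factor() -> int:
    """Pick r with 2 <= r < N that is invertible modulo N."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return r


def blind(m: int, r: int) -> int:
    return (m * pow(r, E, N)) % N        # user hides m before sending it


def sign(blinded: int) -> int:
    return pow(blinded, D, N)            # signer sees only the blinded value


def unblind(blinded_sig: int, r: int) -> int:
    return (blinded_sig * pow(r, -1, N)) % N  # user removes the blinding


def verify(m: int, signature: int) -> bool:
    return pow(signature, E, N) == m     # anyone can check with the public key


m = message_to_int("pseudonym-1")
r = random_blinding_factor()
signature = unblind(sign(blind(m, r)), r)
print(verify(m, signature))
```

Because the signer only ever handles `blind(m, r)`, it can count how many signatures it has issued to a person (to enforce a per-app maximum) without being able to match any signature to the pseudonym later presented to the app.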